Light Field Super-Resolution using a Low-Rank Prior and Deep Convolutional Neural Networks


R. Farrugia, C. Guillemot,

"Light Field Super-Resolution using a Low-Rank Prior and Deep Convolutional Neural Networks", IEEE Trans. on Pattern Analysis and Machine Intelligence, to appear, 2019. Collaboration with Prof. Reuben Farrugia, University of Malta.

Abstract

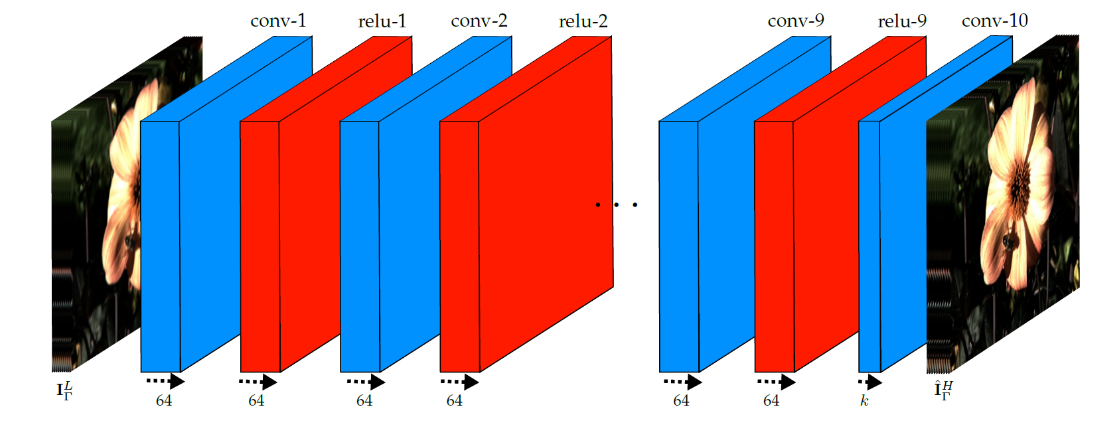

Light field imaging has recently seen renewed interest due to the availability of practical light field capturing systems that offer a wide range of applications in computer vision. However, capturing high-resolution light fields remains technologically challenging, since an increase in angular resolution is often accompanied by a significant reduction in spatial resolution. This paper describes a learning-based spatial light field super-resolution method that restores the entire light field with consistency across all sub-aperture images. The algorithm first uses optical flow to align the light field and then reduces its angular dimension using a low-rank approximation. We then consider the linearly independent columns of the resulting low-rank model as an embedding, which is restored using a deep convolutional neural network (DCNN). The super-resolved embedding is then used to reconstruct the remaining sub-aperture images. The original disparities are restored using inverse warping, where missing pixels are approximated using a novel light field inpainting algorithm. Experimental results show that the proposed method outperforms existing light field super-resolution algorithms, achieving a PSNR gain of 0.23 dB over the second-best performing method. This performance can be further improved by using iterative back-projection as a post-processing step.
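The abstract does not say how the low-rank model or its linearly independent columns are computed, so the NumPy sketch below only illustrates the general idea under stated assumptions: the aligned sub-aperture images are stacked as the columns of a matrix `A` (pixels x views), a truncated SVD gives the rank-k approximation, and a greedy pivoted Gram-Schmidt step picks k linearly independent columns as the embedding. All function names (`low_rank_embedding`, `restore_views`, `nn_upscale_columns`) are hypothetical, optical-flow alignment, inverse warping and inpainting are omitted, and nearest-neighbour upscaling merely stands in for the DCNN.

```python
import numpy as np

def low_rank_embedding(A, k):
    """Hypothetical sketch: rank-k approximation of A (pixels x views) and an
    embedding B made of k linearly independent columns of that approximation.
    Returns (B, C, idx) with B = A_k[:, idx] and B @ C reproducing A_k.
    Assumes k <= rank(A)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    A_k = (U[:, :k] * s[:k]) @ Vt[:k, :]  # best rank-k approximation (Eckart-Young)

    # Greedy column selection (pivoted Gram-Schmidt, an assumed choice): pick the
    # column with the largest component orthogonal to the columns already chosen.
    R = A_k.copy()
    idx = []
    for _ in range(k):
        j = int(np.argmax(np.linalg.norm(R, axis=0)))
        idx.append(j)
        q = R[:, j] / np.linalg.norm(R[:, j])
        R -= np.outer(q, q @ R)  # remove the chosen direction from every column

    B = A_k[:, idx]                              # embedding: k "key" views
    C, *_ = np.linalg.lstsq(B, A_k, rcond=None)  # mixing coefficients, k x n_views
    return B, C, idx

def restore_views(B, C, restore_fn):
    # Restore only the k embedding columns, then rebuild every view as the
    # same linear combination (given by C) of the restored columns.
    return restore_fn(B) @ C

# Usage on synthetic data: 81 views (9x9) of 16x16 pixels, exactly rank 3.
h, w, n_views, k = 16, 16, 81, 3
rng = np.random.default_rng(0)
A = rng.random((h * w, k)) @ rng.random((k, n_views))
B, C, idx = low_rank_embedding(A, k)

def nn_upscale_columns(M, h=h, w=w, s=2):
    # Hypothetical stand-in for the DCNN: nearest-neighbour upscaling of each column.
    return np.stack([np.kron(col.reshape(h, w), np.ones((s, s))).ravel() for col in M.T], axis=1)

A_hr = restore_views(B, C, nn_upscale_columns)
print(A_hr.shape)  # (1024, 81)
```

With a linear stand-in such as this one, restoring the 3 embedding columns gives the same result as upscaling all 81 views directly. A nonlinear DCNN breaks that equality, but every reconstructed view is still a combination of the same restored embedding through the shared coefficients `C`.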

Results

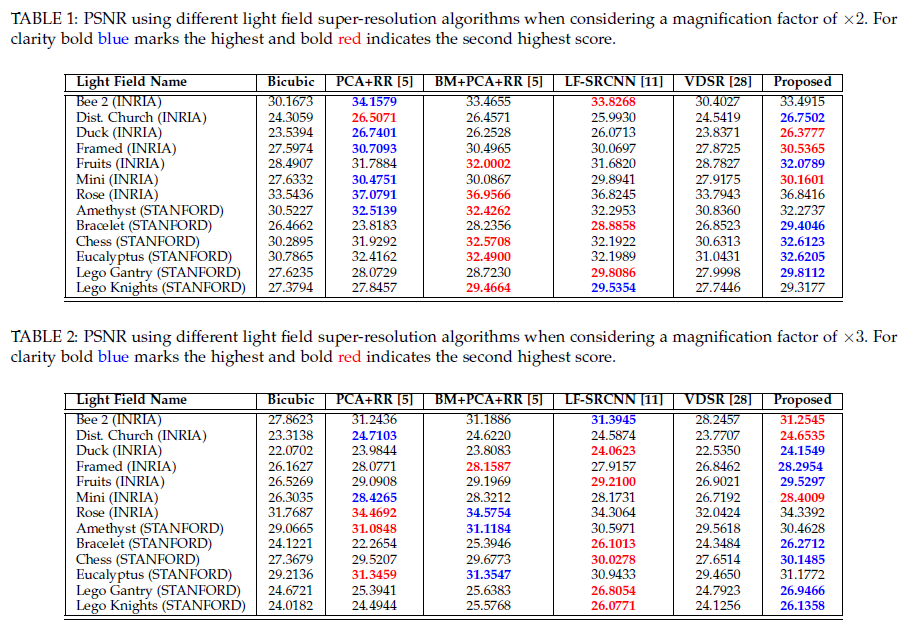

In all the experiments, we degrade each sub-aperture image using a Gaussian filter of size 7x7 with a standard deviation of 1.6, followed by downscaling. We train our deep learning method using 98 light fields from the EPFL, INRIA and HCI datasets and test it on a different set of light fields. The proposed method was compared against a number of both light field and single-image super-resolution algorithms. The PSNR results in table 1 show that our method achieves very competitive performance. The video shows the low-resolution light field and the corresponding light field restored by our proposed method. It can be immediately noticed that our method manages to restore the texture detail while preserving the angular coherence.
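The degradation described above (7x7 Gaussian, standard deviation 1.6, then downscaling) can be sketched in NumPy as follows, together with a PSNR helper and the iterative back-projection post-processing mentioned in the abstract. This is an illustrative sketch, not the authors' code: the magnification factor `s = 2`, mirror padding, plain decimation, nearest-neighbour upsampling, intensities in [0, 1] and the use of the same blur as back-projection kernel are all assumptions, and the exact PSNR protocol (colour channel, border cropping) is not stated in the text.

```python
import numpy as np

def gaussian_1d(size=7, sigma=1.6):
    # Normalised 1-D Gaussian; the 7x7 kernel is its outer product (separable).
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-ax**2 / (2.0 * sigma**2))
    return g / g.sum()

def blur(img, size=7, sigma=1.6):
    # Separable 7x7 Gaussian blur; mirror ("reflect") padding is an assumption.
    g = gaussian_1d(size, sigma)
    r = size // 2
    h, w = img.shape
    p = np.pad(img, r, mode="reflect")
    tmp = sum(g[i] * p[i:i + h, :] for i in range(size))     # vertical pass
    return sum(g[i] * tmp[:, i:i + w] for i in range(size))  # horizontal pass

def degrade(hr, s):
    # Blur, then keep every s-th pixel (plain decimation is an assumption).
    return blur(hr)[::s, ::s]

def upsample_nn(lr, s):
    # Nearest-neighbour upsampling: replicate each pixel into an s x s block.
    return np.kron(lr, np.ones((s, s)))

def back_project(x0, y, s, n_iter=20, step=1.0):
    # Iterative back-projection: push the low-resolution residual back into the
    # high-resolution estimate so that degrade(x, s) moves towards y.
    x = np.array(x0, dtype=float)
    for _ in range(n_iter):
        residual = y - degrade(x, s)
        x += step * blur(upsample_nn(residual, s))
    return x

def psnr(ref, est, peak=1.0):
    # Peak signal-to-noise ratio in dB, assuming intensities in [0, peak].
    mse = np.mean((np.asarray(ref, float) - np.asarray(est, float)) ** 2)
    return 10.0 * np.log10(peak**2 / mse)

# Usage on a smooth synthetic 64x64 "sub-aperture image".
s = 2  # hypothetical magnification factor
yy, xx = np.mgrid[0:64, 0:64] / 64.0
hr = 0.5 + 0.25 * np.sin(2 * np.pi * 3 * xx) * np.cos(2 * np.pi * 2 * yy)
lr = degrade(hr, s)       # (32, 32) low-resolution view
x0 = upsample_nn(lr, s)   # crude initial high-resolution estimate
x = back_project(x0, lr, s)
res0 = np.linalg.norm(lr - degrade(x0, s))  # consistency error before back-projection
res1 = np.linalg.norm(lr - degrade(x, s))   # expected to be smaller for this smooth image
print(lr.shape, res0, res1, psnr(hr, x0), psnr(hr, x))
```

In the abstract, back-projection is applied to the output of the super-resolution method rather than to a nearest-neighbour estimate; `x0` is used here only to keep the example self-contained.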


Comparison with the original low-resolution light field


Comparison with LF-SRCNN
