Deep Unrolling for Light Field Compressed Acquisition using Coded Masks


Abstract

Compressed sensing using color-coded masks has recently been considered for capturing light fields with a small number of measurements. Such an acquisition scheme is very practical, since any consumer-level camera can be turned into a light field camera by simply adding a coded mask in front of the sensor. We present an efficient and mathematically grounded deep learning model to reconstruct a light field from a set of measurements obtained using a color-coded mask and a color filter array (CFA). Following the promising trend of unrolling optimization algorithms with learned priors, we formulate light field reconstruction as an inverse problem and derive a principled deep network architecture from this formulation. We also introduce a closed-form extraction of information from the acquisition, whereas similar methods in the recent literature systematically rely on an approximation. We show that our approach achieves better reconstruction quality than similar deep learning methods, and, using realistic simulations of the acquisition process, that it is robust to noise. In addition, we show that our framework allows for the optimization of the physical components of the acquisition device, namely the color distribution on the coded mask and the CFA pattern.

Overview of the method

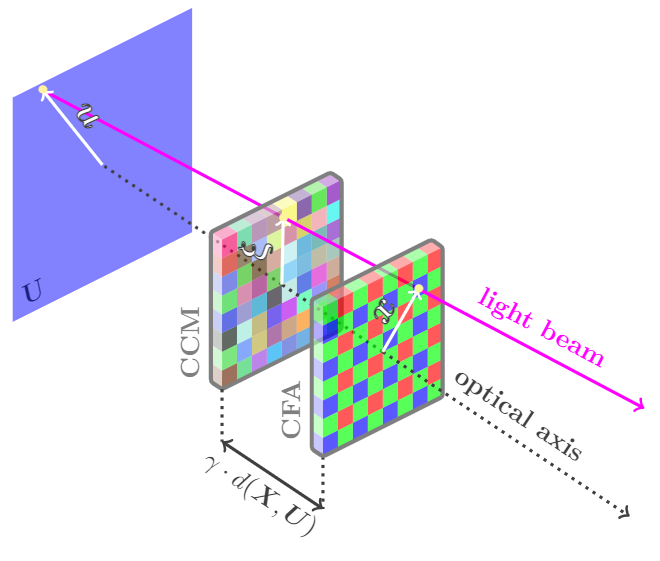

Our method reconstructs light fields that are compressively acquired with a color-coded mask and a color filter array. The light field is first filtered by the color-coded mask, which multiplexes it in both the angular and the spectral domains, and then by the color filter array placed directly in front of the sensor. The filtered light field is finally recorded by a conventional monochromatic photosensor.

This framework effectively realizes a linear projection of the light field onto a monochromatic image.
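As a rough illustration of this linear projection, the sketch below simulates a single shot under simplifying assumptions that are ours, not necessarily the authors': the light field is a NumPy array of shape (V, H, W, C) (V views, C color channels), the coded mask as seen from each view is given directly as a per-view transmittance array of the same shape, and the CFA is a one-hot RGGB Bayer pattern of shape (H, W, C). Each sensor pixel then sums, over views and colors, the light field weighted by the mask and the CFA. The helper names `forward_single_shot` and `bayer_cfa` are hypothetical.

```python
import numpy as np

def forward_single_shot(lf, mask, cfa):
    """Simulate one coded-mask + CFA measurement (simplified, hypothetical model).

    lf:   light field, shape (V, H, W, C)
    mask: per-view mask transmittance, shape (V, H, W, C), values in [0, 1]
    cfa:  color filter array, shape (H, W, C), one-hot along C
    Returns a monochromatic image of shape (H, W).
    """
    # Weight every light field sample by the mask and the CFA, then
    # integrate over views (axis 0) and color channels (axis 3).
    return np.sum(mask * cfa[None] * lf, axis=(0, 3))

def bayer_cfa(h, w):
    """RGGB Bayer pattern as a one-hot (H, W, 3) array."""
    cfa = np.zeros((h, w, 3))
    cfa[0::2, 0::2, 0] = 1.0  # red
    cfa[0::2, 1::2, 1] = 1.0  # green
    cfa[1::2, 0::2, 1] = 1.0  # green
    cfa[1::2, 1::2, 2] = 1.0  # blue
    return cfa

rng = np.random.default_rng(0)
V, H, W, C = 9, 8, 8, 3  # e.g. 3x3 views of a tiny 8x8 RGB light field
lf = rng.random((V, H, W, C))
mask = rng.random((V, H, W, C))
cfa = bayer_cfa(H, W)
y = forward_single_shot(lf, mask, cfa)
print(y.shape)  # (8, 8)
```

Since the operator is linear, it can equivalently be written as a matrix acting on the vectorized light field, which is the form an inverse-problem formulation works with.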

By additionally translating the sensor, several shots of the light field can be recorded, which usually improves the reconstruction quality considerably.
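To illustrate why extra shots help, the following sketch extends a simplified single-shot model to several sensor positions. The assumptions are ours: the light field has shape (V, H, W, C), the mask is a per-view transmittance array of the same shape, a one-hot RGGB Bayer CFA is fixed to the sensor, and the sensor translation is approximated by horizontally rolling the mask pattern by a whole number of pixels. Under this model each sensor pixel contributes one equation per shot on the V·C unknowns at that position. The helper names `forward_multi_shot` and `per_pixel_operator` are hypothetical.

```python
import numpy as np

def bayer_cfa(h, w):
    """RGGB Bayer pattern as a one-hot (H, W, 3) array."""
    cfa = np.zeros((h, w, 3))
    cfa[0::2, 0::2, 0] = cfa[1::2, 1::2, 2] = 1.0        # red, blue
    cfa[0::2, 1::2, 1] = cfa[1::2, 0::2, 1] = 1.0        # greens
    return cfa

def forward_multi_shot(lf, mask, cfa, shifts):
    """Stack one simulated measurement per sensor position: shape (S, H, W).

    Each shot rolls the mask horizontally by `shift` pixels relative to the
    sensor and CFA (a stand-in assumption for the real sensor translation).
    """
    shots = []
    for shift in shifts:
        shifted_mask = np.roll(mask, shift, axis=2)       # axis 2 = image width
        shots.append(np.sum(shifted_mask * cfa[None] * lf, axis=(0, 3)))
    return np.stack(shots)

def per_pixel_operator(mask, cfa, shifts, h, w):
    """Sensing matrix restricted to sensor pixel (h, w): shape (S, V*C)."""
    rows = [(np.roll(mask, s, axis=2)[:, h, w, :] * cfa[h, w]).ravel() for s in shifts]
    return np.stack(rows)

rng = np.random.default_rng(0)
V, H, W, C = 9, 8, 8, 3
lf = rng.random((V, H, W, C))
mask = rng.random((V, H, W, C))
cfa = bayer_cfa(H, W)
shifts = [0, 1, 2]                                        # three sensor positions
Y = forward_multi_shot(lf, mask, cfa, shifts)
A_p = per_pixel_operator(mask, cfa, shifts, 2, 5)
print(Y.shape, A_p.shape, np.linalg.matrix_rank(A_p))  # (3, 8, 8) (3, 27) 3
```

In this toy model, each additional shot adds one independent equation per pixel, which is one way to see why multi-shot acquisition tends to help the reconstruction.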

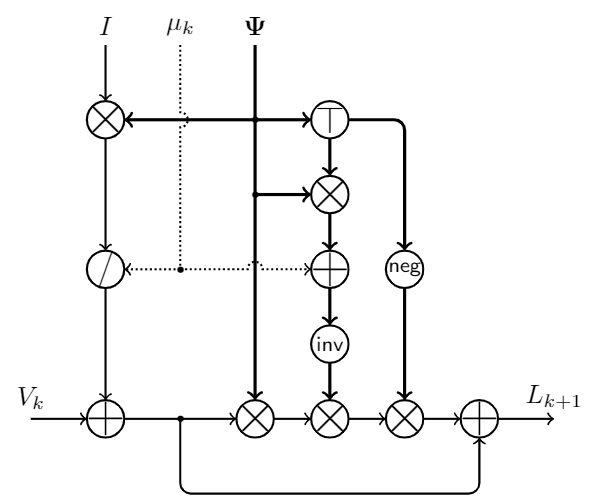

Our architecture then reconstructs the full light field from the monochromatic measurements. It is a deep neural network obtained by unrolling the half-quadratic splitting (HQS) optimization algorithm.
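For reference, the generic HQS scheme can be written as follows, assuming a linear sensing operator $A$, measurements $y$, a regularizer $R$ with weight $\lambda$, and a penalty parameter $\mu$. This notation is ours, and the paper's exact parametrization may differ. HQS introduces an auxiliary variable $z$ and alternates between two subproblems:

$$
\min_{x,z}\ \tfrac{1}{2}\|y - Ax\|_2^2 + \lambda R(z) + \tfrac{\mu}{2}\|x - z\|_2^2
$$

$$
x^{(k+1)} = \arg\min_{x}\ \tfrac{1}{2}\|y - Ax\|_2^2 + \tfrac{\mu}{2}\|x - z^{(k)}\|_2^2 = \left(A^\top A + \mu I\right)^{-1}\left(A^\top y + \mu z^{(k)}\right)
$$

$$
z^{(k+1)} = \arg\min_{z}\ \lambda R(z) + \tfrac{\mu}{2}\|z - x^{(k+1)}\|_2^2 = \operatorname{prox}_{(\lambda/\mu) R}\left(x^{(k+1)}\right)
$$

Unrolling typically fixes the number of iterations $K$ and replaces the proximal step with a trainable network, with $\mu$ possibly learned separately for each iteration.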

While traditional optimization algorithms generally require a very large number of iterations to converge, unrolled algorithms usually perform only a small number of iterations. The resulting neural network reconstructs the signal by successively refining a tentative reconstruction. It alternates between data-term minimization layers and learned proximal operators.

The data-term minimization layer enforces the consistency of the intermediate reconstruction with the measurements, while the proximal operator can be interpreted either as a denoiser or as the projection of a signal onto the sub-manifold of "natural" light fields. The proximal operator is learned end-to-end.
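The alternation described above can be sketched on a small dense problem. This is a minimal illustration, not the authors' network: the sensing matrix `A` is random, and the learned proximal operator is replaced by a hypothetical stand-in, the projection onto the box [0, 1] (the exact proximal operator of that set's indicator function). The function names and the per-iteration penalty values `mus` are assumptions chosen for illustration.

```python
import numpy as np

def data_step(A, y, z, mu):
    """Data-term minimization: argmin_x ||y - A x||^2 / 2 + mu / 2 ||x - z||^2."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + mu * np.eye(n), A.T @ y + mu * z)

def prox_stand_in(x):
    """Hypothetical stand-in for the learned proximal operator:
    projection onto the box [0, 1] (the prox of its indicator function)."""
    return np.clip(x, 0.0, 1.0)

def unrolled_hqs(A, y, mus, prox=prox_stand_in):
    """Run len(mus) unrolled HQS iterations, one penalty weight per iteration."""
    z = A.T @ y                     # simple initial tentative reconstruction (an assumption)
    for mu in mus:
        x = data_step(A, y, z, mu)  # enforce consistency with the measurements
        z = prox(x)                 # "denoise" / project onto the prior set
    return z

rng = np.random.default_rng(0)
n, m = 64, 24                       # 64 unknowns, 24 measurements (compressive: m < n)
A = rng.random((m, n)) / n
x_true = rng.random(n)
y = A @ x_true
x_hat = unrolled_hqs(A, y, mus=[1.0, 0.5, 0.25, 0.1])
print(x_hat.shape)  # (64,)
```

In a trained unrolled network, `prox` would be a neural network and the values in `mus` would typically be learned jointly with it; the fixed number of iterations is what makes end-to-end training by backpropagation practical.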

We design a data-term minimization layer that solves the data-fidelity subproblem efficiently and in closed form.
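One way such a closed form can be made cheap is sketched below; it is an illustration under our own assumptions, not necessarily the derivation used in the paper. If each sensor pixel depends only on the V·C light field samples at the same spatial position, the sensing operator is block-diagonal over pixels, with a small block $A_p$ of shape (S, V·C) for S shots. The Woodbury identity $(A_p^\top A_p + \mu I)^{-1} = \frac{1}{\mu}\left(I - A_p^\top(\mu I_S + A_p A_p^\top)^{-1} A_p\right)$ then reduces each pixel's solve to an S×S system. The function name and array layout are hypothetical.

```python
import numpy as np

def closed_form_data_step(A, y, z, mu):
    """Per-pixel closed-form solution of argmin_x ||y - A x||^2 / 2 + mu / 2 ||x - z||^2.

    Assumes (hypothetically) a pixel-wise block-diagonal sensing operator:
    A: (P, S, n) -> for each of P pixels, S shots and n = V*C unknowns
    y: (P, S) measurements, z: (P, n) current estimate.
    Woodbury: (A^T A + mu I)^{-1} = (I - A^T (mu I_S + A A^T)^{-1} A) / mu
    """
    r = np.einsum('psn,ps->pn', A, y) + mu * z          # A^T y + mu z
    gram = np.einsum('psn,ptn->pst', A, A)               # A A^T, shape (P, S, S)
    gram += mu * np.eye(A.shape[1])                      # mu I_S + A A^T
    Ar = np.einsum('psn,pn->ps', A, r)                   # A r, shape (P, S)
    t = np.linalg.solve(gram, Ar[..., None])[..., 0]     # batched S x S solves
    return (r - np.einsum('psn,ps->pn', A, t)) / mu

rng = np.random.default_rng(0)
P, S, n = 64, 3, 27        # 8x8 pixels, 3 shots, 9 views x 3 colors (illustrative sizes)
A = rng.random((P, S, n))
y = rng.random((P, S))
z = rng.random((P, n))
x = closed_form_data_step(A, y, z, mu=0.5)

# Reference: dense per-pixel solve of (A^T A + mu I) x = A^T y + mu z
x_ref = np.stack([np.linalg.solve(A[p].T @ A[p] + 0.5 * np.eye(n), A[p].T @ y[p] + 0.5 * z[p])
                  for p in range(P)])
print(np.allclose(x, x_ref))  # True
```

The appeal of this formulation is that the per-pixel cost grows with S³ rather than (V·C)³, which stays small when only a few shots are taken.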

Results on the Kalantari and Stanford datasets

Light fields are taken from the dataset of Nima Khademi Kalantari and Ravi Ramamoorthi (Deep High Dynamic Range Imaging of Dynamic Scenes, ACM Transactions on Graphics, Proceedings of SIGGRAPH 2017) and from the dataset of Lytro Illum images by Abhilash Sunder Raj, Michael Lowney, Raj Shah, and Gordon Wetzstein.

The videos below show the different sub-aperture images of the reconstructed light fields, for our method in both the 1-shot and the 3-shot case. In the single-shot acquisition framework, we compare our method to Nabati et al. (O. Nabati, D. Mendlovic, and R. Giryes, "Fast and accurate reconstruction of compressed color light field", 2018) and to Le Guludec et al. (G. Le Guludec, E. Miandji, and C. Guillemot, "Deep Light Field Acquisition Using Learned Coded Mask Distributions for Color Filter Array Sensors", 2021). In the 3-shot acquisition framework, we compare it to Guo et al. (M. Guo, J. Hou, J. Jin, J. Chen, and L.-P. Chau, "Deep Spatial-Angular Regularization for Compressive Light Field Reconstruction over Coded Apertures", 2020).


Tulips

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.

Buttercup

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.

Orchids

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.

Seahorse

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.

Cars

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.

White rose

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.

Rock

Videos (left to right, top to bottom): Nabati et al., Guo et al., Le Guludec et al., Ours (3-shot), Ours (1-shot), Ground truth.