Denoising of 3D Point Clouds Constructed from Light Fields


Christian Galea and Christine Guillemot,

"Denoising of 3D Point Clouds Constructed from Light Fields", ICASSP 2019.

Abstract

Light fields are 4D signals capturing rich information from a scene. The availability of multiple views enables scene depth estimation, which can be used to generate 3D point clouds. The constructed 3D point clouds, however, generally contain distortions and artefacts primarily caused by inaccuracies in the depth maps. This paper describes a method for noise removal in 3D point clouds constructed from light fields. While existing methods discard outliers, the proposed approach instead attempts to correct the positions of points, and thus reduce noise without removing any points, by exploiting the consistency among views in a light field. The proposed 3D point cloud construction and denoising method exploits uncertainty measures on depth values. We also investigate the possible use of the corrected point cloud to improve the quality of the depth maps estimated from the light field.

Algorithm overview

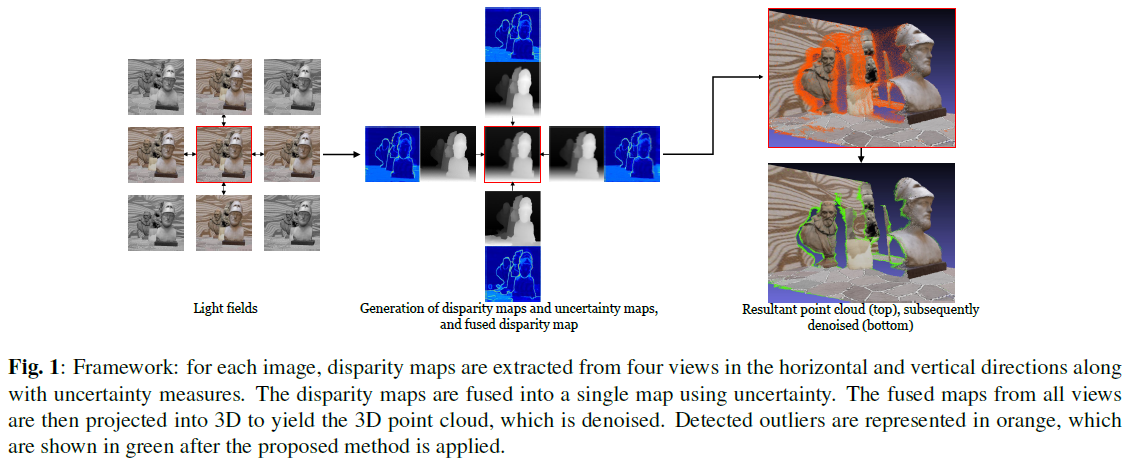

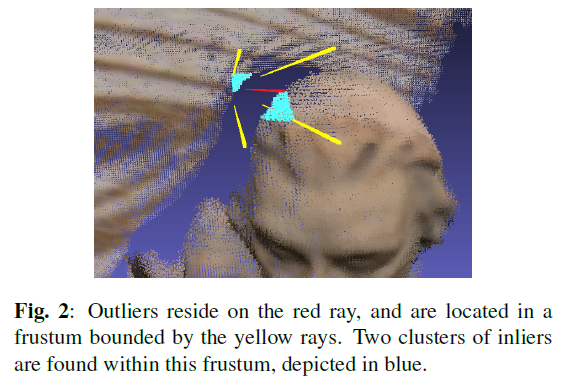

The proposed framework consists of three primary stages: (1) estimation of disparity maps from four views in the horizontal and vertical directions using the ProbFlow [1] method, which also generates uncertainty maps representing the reliability of the depth value estimates; (2) fusion of the disparity maps into a single map using uncertainty (for each view); and (3) denoising of the point cloud that is obtained by projecting the fused maps from all views into 3D. The proposed denoising method first classifies points as either inliers or outliers using the Statistical Outlier Removal (SOR) filter [2], and then uses the known camera parameters to try to determine improved positions for the detected outliers using geometric and photometric information. Please refer to the paper for more details.
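To make the fusion stage more concrete, the following is a minimal sketch of one common way to combine several disparity estimates of the same pixel using their uncertainties. It assumes inverse-uncertainty weighting, treating each uncertainty as a variance-like quantity; this page does not state the exact fusion rule used in the paper, so the function `fuse_disparities`, its parameters, and the weighting scheme are illustrative assumptions only.

```python
def fuse_disparities(estimates, uncertainties, eps=1e-9):
    """Fuse several disparity maps into one by inverse-uncertainty weighting.

    ASSUMPTION: this weighting rule is illustrative and is not taken from the
    paper. `estimates` and `uncertainties` are lists of flattened maps (one
    inner list per source estimate, one value per pixel).
    """
    n_pixels = len(estimates[0])
    fused = []
    for p in range(n_pixels):
        # A low uncertainty yields a large weight; eps avoids division by zero.
        weights = [1.0 / (u[p] + eps) for u in uncertainties]
        total = sum(weights)
        fused.append(sum(w * e[p] for w, e in zip(weights, estimates)) / total)
    return fused


# Two hypothetical estimates of a two-pixel map.
estimates = [[1.0, 4.0], [3.0, 4.0]]
uncertainties = [[1.0, 1.0], [1.0, 3.0]]
fused = fuse_disparities(estimates, uncertainties)
```

With equal uncertainties the fused value is the plain average of the estimates, while an estimate with a larger uncertainty contributes proportionally less.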


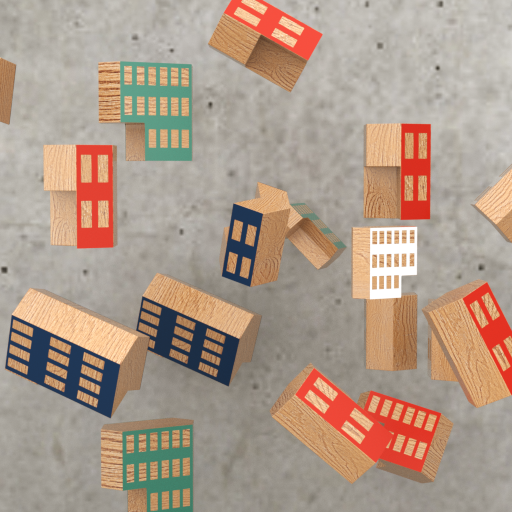

Test dataset

Five sparse light fields are considered, exhibiting significant disparities which make the disparity estimation more challenging and thus increase the likelihood of inaccurate disparity estimation. Each scene consists of a 9 x 9 grid of synthetic images at a resolution of 512 x 512 pixels.

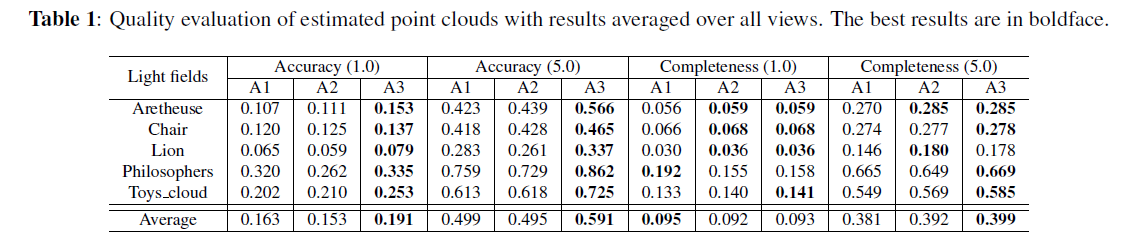

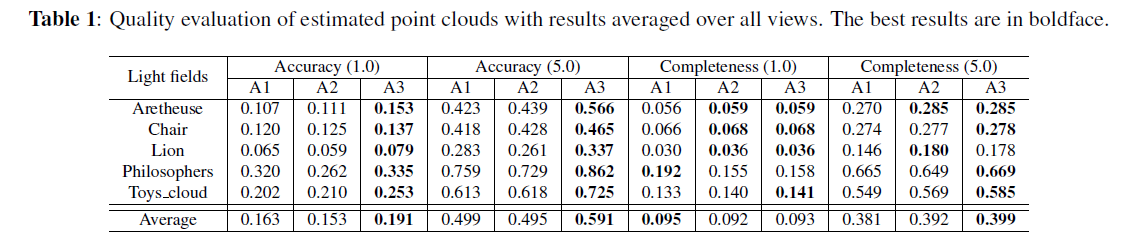


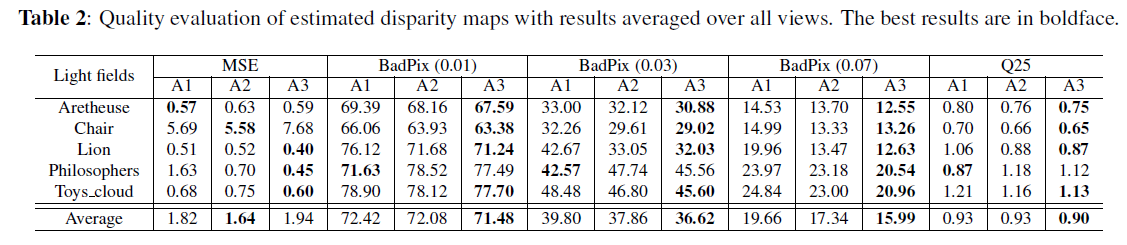

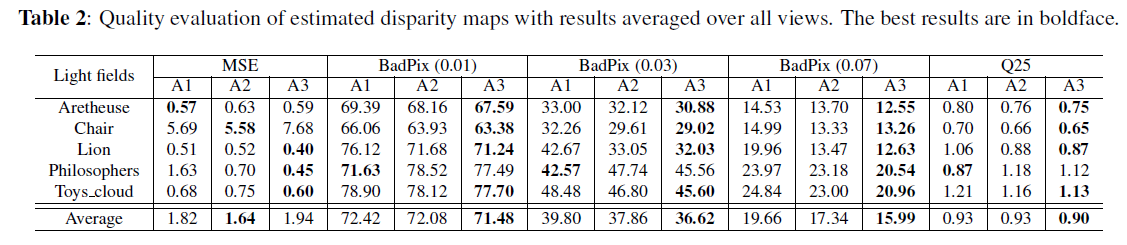



| Aretheuse [-13.5, 1.3] | Chair [-25.6, 3.7] | Lion [-14.4, 6.3] | Philosophers [-16.5, 11.1] | Toys_cloud [-11.0, 0.5] |

Quantitative assessment

Evaluation is performed both in the 3D domain using point clouds, and in the 2D domain by using the re-projected disparity maps. For each scene, disparity maps are obtained for the odd-numbered row and column indices of the light field grid only, such that the number of depth maps is reduced to 5 x 5 = 25. Thus, the number of computations is substantially reduced whilst still retaining the vast majority of the information in a scene (due to the high coherence among neighbouring views). Results are averaged over all of these views.

For 3D evaluation (Table 1), method A1 represents the point clouds derived from the projection of the disparity maps computed by ProbFlow [1], method A2 represents the point clouds derived from the projection of the disparity maps fused using uncertainty estimation, and method A3 represents the point cloud derived after the proposed denoising method has been applied.

For disparity map evaluation (Table 2), methods A1 and A2 represent the disparity maps obtained from ProbFlow and the disparity maps fused using uncertainty estimation, respectively, while method A3 denotes the re-projected disparity maps from the 3D point clouds corrected with the proposed method.
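The view subsampling used for evaluation can be illustrated with a short sketch. It assumes 1-based row and column indices on the 9 x 9 grid, so that the odd-numbered indices are 1, 3, 5, 7 and 9; the helper name `odd_view_indices` is hypothetical.

```python
def odd_view_indices(grid_size=9):
    """Return (row, column) pairs for odd-numbered rows and columns.

    ASSUMPTION: indices are 1-based, as suggested by "odd-numbered" in the text.
    """
    odd = [i for i in range(1, grid_size + 1) if i % 2 == 1]
    return [(r, c) for r in odd for c in odd]


views = odd_view_indices(9)
print(len(views))  # 25 views out of the original 81
```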

Visual comparison

Scenes shown: Aretheuse, Chair, Lion, Philosophers, Toys_cloud.

There are several cases where the disparity is incorrectly estimated for some views, but is correctly estimated in others. One such example is the ground in the Philosophers scene, a part of which is not estimated correctly for the views in the bottom row. However, the estimation is much better in other views. Hence, the incorrect disparities in the subset of views are represented as sparse outliers that are detected by the SOR filter and then subsequently denoised with the proposed method.
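The outlier detection step can be sketched as follows. This is a brute-force, pure-Python illustration of the general idea behind the SOR filter [2]: a point is flagged when its mean distance to its k nearest neighbours is far above the average over the whole cloud. The function name and the values of `k` and `std_ratio` are assumptions chosen for illustration, not the settings used in the paper, and the sketch covers detection only, not the proposed position correction.

```python
import math
import statistics


def statistical_outlier_mask(points, k=8, std_ratio=1.0):
    """Return a list of booleans, True where a point is flagged as an outlier.

    ASSUMPTION: k and std_ratio are illustrative defaults. Brute-force
    O(n^2) neighbour search; a spatial index would be used on real clouds.
    """
    if len(points) < 2:
        return [False] * len(points)
    mean_dists = []
    for i, p in enumerate(points):
        # Distances from point i to every other point, nearest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(statistics.fmean(dists[:k]))
    # Global statistics of the per-point mean neighbour distances.
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma
    return [d > threshold for d in mean_dists]


# A dense 3 x 3 x 3 cluster plus one isolated point far from it.
cloud = [(float(x), float(y), float(z))
         for x in range(3) for y in range(3) for z in range(3)]
cloud.append((50.0, 50.0, 50.0))
mask = statistical_outlier_mask(cloud, k=4)
```

In this toy cloud only the isolated point is flagged. Points that are wrong in most views form dense clusters of their own and would not stand out under such a test, which is consistent with the limitation discussed next.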

In some cases, the disparities estimated by ProbFlow are incorrect in the majority of views; in such cases, it is hard to detect these erroneous points. Moreover, due to the similarity in colour within several of the scenes considered, it is sometimes challenging to ascertain the group of points to which a detected outlier should be assigned.

This work presents a first step in attempting to correct, rather than remove, noisy points derived from the projection of depth maps estimated from sparse light fields. The results are promising and suggest avenues for future research. Future work includes the use of a more advanced method to detect outliers, and the consideration of non-Lambertian surfaces.

References

[1] Anne S. Wannenwetsch, Margret Keuper, and Stefan Roth, "ProbFlow: Joint optical flow and uncertainty estimation," in Proceedings of the Sixteenth IEEE International Conference on Computer Vision, 2017.

[2] Radu Bogdan Rusu, Zoltan Csaba Marton, Nico Blodow, Mihai Dolha, and Michael Beetz, "Towards 3D point cloud based object maps for household environments," Robotics and Autonomous Systems, vol. 56, no. 11, pp. 927-941, 2008.